Over the summer, the Department of Homeland Security announced a singular opportunity for a contractor to be a part of one of President Trump’s signature campaign promises: to implement a new system for “extreme vetting” that would keep the country safe from terrorists and other visitors who would do it harm. The president’s policy goal has had a bumpy start during his first year in office. After the first version of Trump’s travel ban was temporarily blocked in court, the White House crafted a new, somewhat watered-down version that it hoped would have a better chance against the inevitable onslaught of legal challenges. But Travel Ban 2.0 has had its ups and downs, too, and is currently only partially in effect.

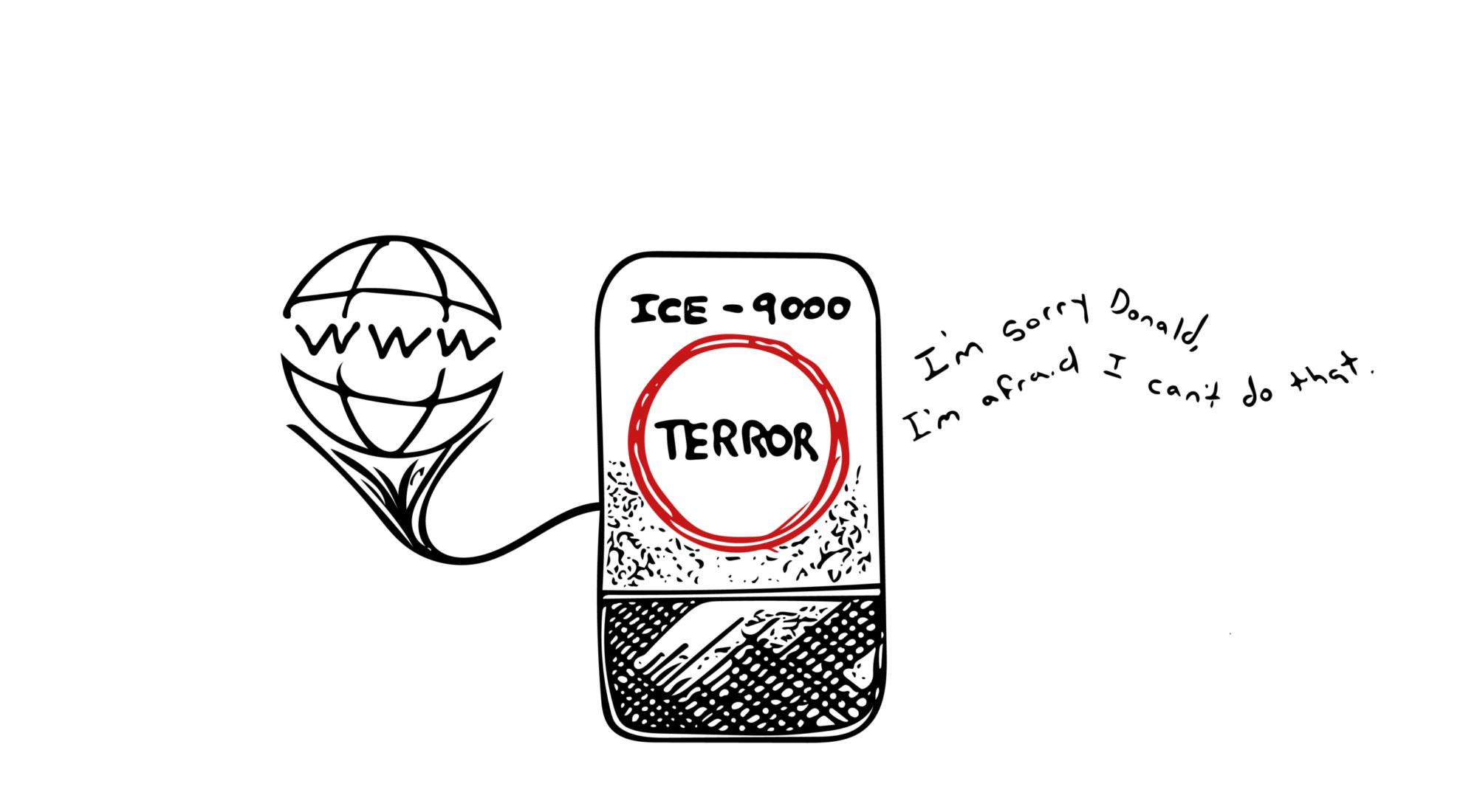

The process DHS set in motion over the summer has drawn far less attention than the president’s travel bans. But if the government sees it through, it would have a considerably more far-reaching effect. Publicly available documents lay bare the sheer scope and ambition of DHS’s goals for a project it’s calling the Extreme Vetting Initiative. Experts say the documents also reveal the government’s lack of understanding of what technology is capable of doing.

A document that outlines the government’s goals says that Immigration and Customs Enforcement, or ICE, is obligated to improve how it evaluates visitors in order to comply with the president’s executive order calling for extreme vetting. ICE wants to know whether applicants intend to commit crimes or acts of terrorism in the United States, a worthy goal that’s in line with the agency’s purpose. But the demands go further: The agency says it also needs to comply with the executive order’s requirement to come up with a process to “evaluate an applicant’s probability of becoming a positively contributing member of society as well as their ability to contribute to national interests.”

To those ends, ICE called on vendors to submit ideas for a system that “automates, centralizes, and streamlines the current manual vetting system, while simultaneously making determinations via automation if the data retrieved is actionable.”

There’s a lot there, so let’s break it down. The government wants the system to be able to screen visa applications at a rate of 1.5 million a year and to evaluate them as quickly as possible. It wants the contractor to come up with at least 10,000 investigative leads a year. And perhaps most significantly, the government is asking contractors to devise a way of scraping social media and other publicly available online sources in order to determine who poses a risk to the United States, to find individuals’ addresses, and even to investigate cold cases. The system should be able to track nonimmigrant visa holders as they move around the country, and continually monitor their online presence, watching for red flags.

The list of potential online sources is long and thorough: It includes “media, blogs, public hearings, conferences, academic websites, social media websites such as Twitter, Facebook, and LinkedIn, radio, television, press, geospatial sources, internet sites, and specialized publications.”

Last Thursday, more than 50 mathematicians and computer-science experts, plus more than 50 civil-liberties and privacy groups, tried to pour cold water on the government’s expectations. For one, the technology experts wrote in a letter, it’s not possible to build a system that will accurately do what the government wants. Because the frequency of terrorist attacks in the U.S. is very low, they argued, there’s not enough data to be able to create a system that can predict them. “Even the ‘best’ automated decision-making models generate an unacceptable number of errors when predicting rare events,” they wrote.
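The experts’ point about rare events is, at bottom, arithmetic, and a rough sketch makes it concrete. The Python snippet below is only an illustration: the 1.5 million figure is the annual application volume cited above, but the number of genuine threats (100) and the model’s 99 percent accuracy rates are hypothetical values chosen for the example, not figures from ICE or from the letter. The function name `screening_outcomes` is likewise invented here.

```python
def screening_outcomes(population, true_threats, sensitivity, specificity):
    """Return (true_positives, false_positives, precision) for a screening model.

    sensitivity: share of genuine threats the model correctly flags
    specificity: share of harmless applicants the model correctly clears
    """
    harmless = population - true_threats
    true_positives = sensitivity * true_threats
    false_positives = (1 - specificity) * harmless
    # Precision: of everyone flagged, what fraction is a genuine threat?
    precision = true_positives / (true_positives + false_positives)
    return true_positives, false_positives, precision


# 1.5 million applications a year comes from the ICE documents;
# the other three inputs are hypothetical assumptions for illustration.
tp, fp, precision = screening_outcomes(
    population=1_500_000,
    true_threats=100,
    sensitivity=0.99,
    specificity=0.99,
)

print(f"Genuine threats flagged:     {tp:,.0f}")
print(f"Harmless applicants flagged: {fp:,.0f}")
print(f"Share of flags that are real: {precision:.2%}")
```

Under those assumed numbers, a model that is right 99 percent of the time would still flag roughly 15,000 harmless applicants alongside 99 genuine threats, so well under 1 percent of the people it singles out would actually be dangerous. Different assumptions shift the totals, but the imbalance persists whenever the thing being predicted is very rare.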

Instead, the human-rights groups wrote in a separate letter, whatever system the government ends up buying will be forced to rely on proxies to predict an individual’s propensity for violence, or the probability that he or she will contribute positively to society and U.S. national interests. Those proxies, they argued, will almost certainly be reductive and discriminatory. The system might use taxable income, for example, as a proxy for contributions to society, or even race as a proxy for violent tendencies.

Because big-data algorithms don’t need explicit human input to find complex patterns, the contractor wouldn’t necessarily have to manually build in problematic predictors for them to arise. If, for example, a vendor used machine learning (a technology the government says it’s interested in for this project) to try to build a predictive model for individuals’ terrorist proclivities, the black-box algorithm might come up with those proxies on its own, without the humans in charge even knowing. The dearth of data could make the resulting model spew out dangerous false positives, identifying people as risky just because they aren’t rich, or because they’re from a certain country or ethnic minority.

“Simply put, no computation methods can provide reliable or objective assessments of the traits that ICE seeks to measure,” wrote the technologists. “In all likelihood, the proposed system would be inaccurate and biased.” It would be a “digital smokescreen” that would allow ICE to deport people at will, wrote Alvaro Bedoya, the executive director of Georgetown Law’s Center on Privacy & Technology, on Twitter.

This tension between the government’s expectations of what technology can solve and experts’ sober view of what tech is really capable of is, by now, a familiar pattern. When Congress was gearing up to pass a bill in 2015 that would encourage businesses to share information about cyberthreats with the government, tech experts and privacy advocates said it wouldn’t work: The firehose of information that would circulate under the bill would actually make it more difficult to identify important threats, technologists said. (This view was corroborated by the DHS, as I reported at the time.) The bill passed anyway.

A similar argument has played out around encryption. The FBI, under then-director James Comey, repeatedly insisted in past years that computer scientists are a bright enough bunch to figure out how to create a digital backdoor that would allow law enforcement access to data while keeping the bad guys out. Again, the technology community said that demand is technically unfeasible: Allowing anyone access means potentially allowing everyone access. Federal law enforcement hasn’t been able to gain much ground on this point.

ICE’s hopes of putting algorithms in charge of securing the border are still a ways from fruition. The government says it’s targeting Fiscal Year 2018 for the program’s launch, and it hasn’t even put out a formal request for proposals yet. On the heels of last week’s letters to DHS, privacy groups are circulating petitions to ground the project before it gathers too much steam, and are targeting the companies that might eventually put in bids to build the system. But the train is already chugging along: An “industry day” this summer focused on the Extreme Vetting Initiative drew so many interested companies that it had to be extended to a two-day affair.

In the meantime, readers without American passports or residency might think twice about tweeting this story. It might be a red flag on your visa application one day soon.